Data Science Project Management Best Practices: How to Deliver Success

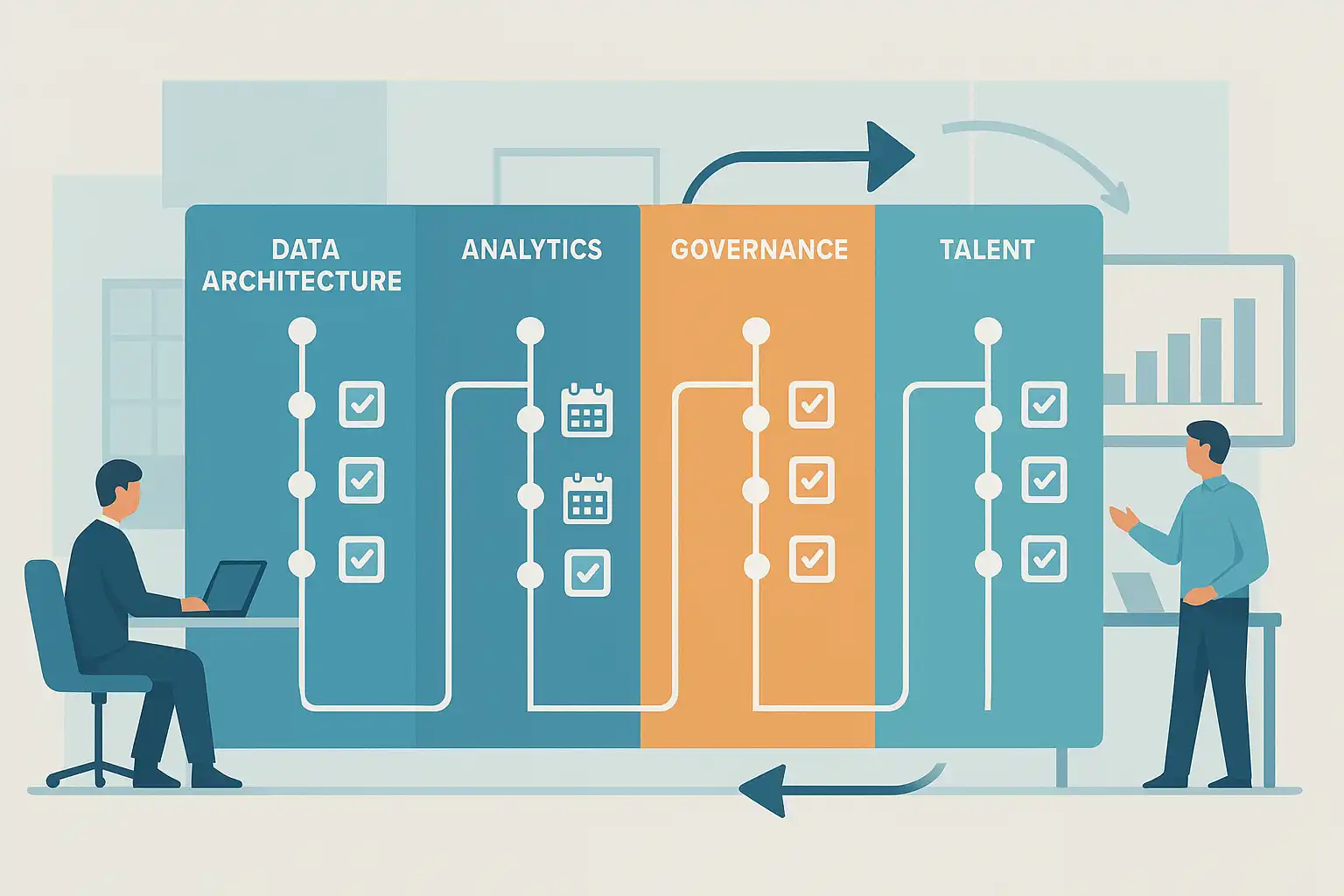

Data science project management is crucial for delivering projects on time and within budget. It has to address challenges that conventional projects rarely face: complex data, evolving models, and uncertain outcomes. Meeting them requires clear goal-setting, effective communication, and collaboration between technical and business teams. A typical project moves through problem definition, data collection, data preparation, modeling, evaluation, deployment, and ongoing monitoring.

Best practices emphasize planning properly, assembling diverse teams, adopting iterative agile methods, ensuring data quality, communicating clearly, managing risks, documenting thoroughly, complying with ethical requirements, preparing for deployment, and improving continuously. Tools such as Jira, Trello, Git, and Jupyter support project organization and collaboration. Common pitfalls to avoid include vague objectives, poor data quality, overengineering, neglected deployment, and inadequate communication.

Success relies on aligning projects with business goals, involving end users, learning iteratively, and measuring both technical and business impact. Combining agile and traditional methods balances flexibility with structure, so teams can adapt without losing control. Regular plan updates and clearly defined project management roles help maintain focus and value delivery. These practices apply to organizations of all sizes and help data science initiatives produce actionable insights and real business impact.