What Is a Data Science Workflow? Steps, Tips, and Tools Explained

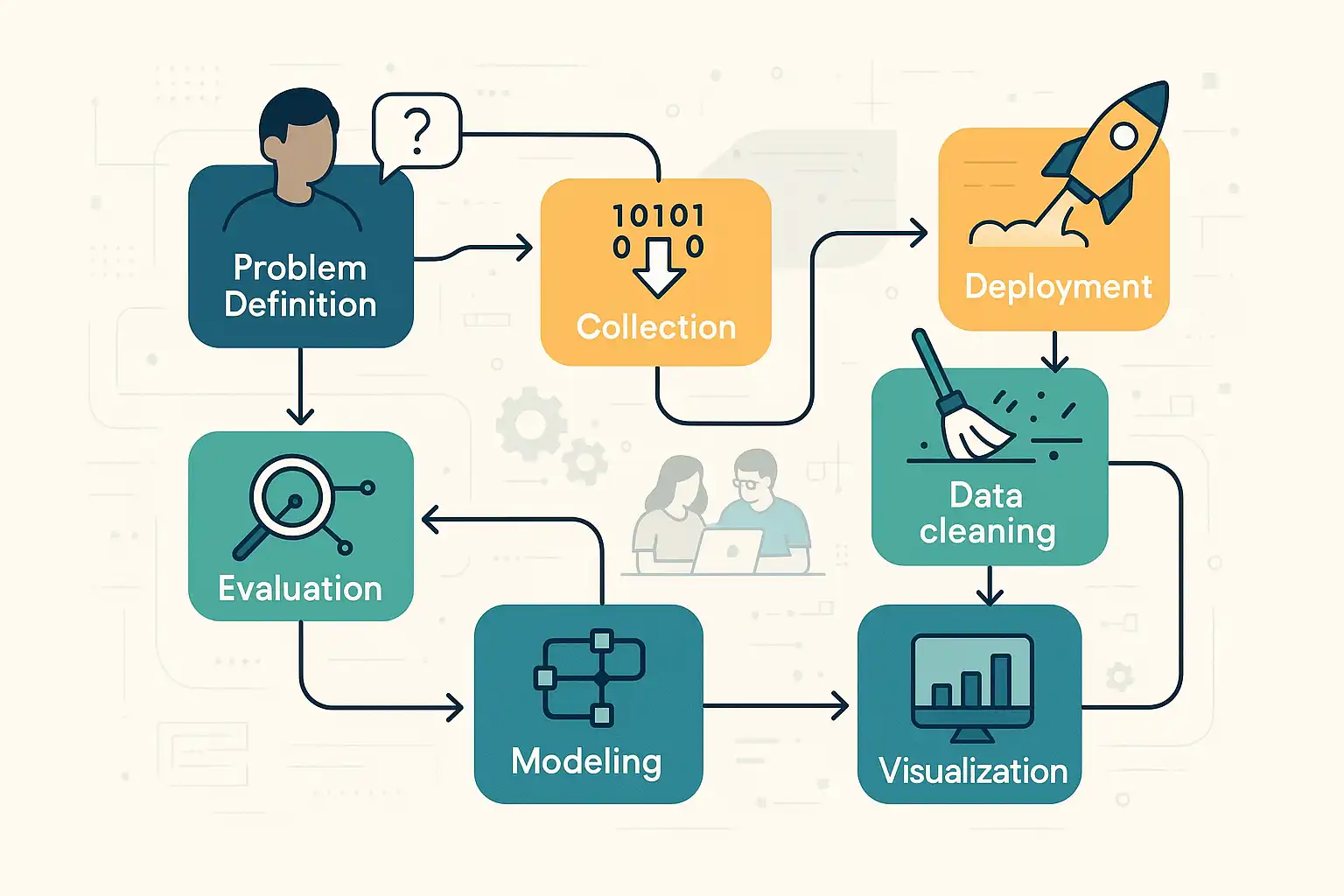

A data science workflow is a structured, step-by-step process that guides a data project from defining the problem to deploying a solution. It helps teams stay organized and produce repeatable, reliable results. A typical workflow has eight phases: defining the problem, collecting data, cleaning and preparing data, exploring and analyzing it, building models, evaluating results, communicating findings, and deploying and monitoring the solution.

Popular frameworks such as CRISP-DM, OSEMN, and the Harvard Data Science Workflow provide templates, but many organizations customize them to fit their needs. Key tools across the stages include brainstorming and project management apps, SQL, Python, R, Jupyter Notebooks, machine learning libraries (scikit-learn, TensorFlow), and deployment platforms (MLflow, Kubeflow).

Best practices include starting with clear objectives, documenting thoroughly, communicating regularly, validating assumptions, embracing iteration, and monitoring continuously; together they make outcomes more efficient and trustworthy. The right workflow depends on team expertise, project complexity, and stakeholder needs. Adapting a framework with team input, piloting it on small projects, and staying transparent all help maximize its impact.

A workflow is broader than a data pipeline: pipelines automate data movement, while workflows cover the entire project life cycle. Following a robust data science workflow supports collaboration, minimizes errors, and helps new data scientists learn best practices.
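To make the phases above more concrete, here is a minimal sketch in plain Python that chains a few of them (collect, clean, explore, model, evaluate) as small functions. Everything in it is an illustrative assumption rather than part of any named framework: the toy `RAW_ROWS` data set, the function names, the alternating train/test split, and the choice of a simple least-squares line as the "model". A real project would likely swap in SQL queries, pandas, and a library such as scikit-learn at the corresponding steps.

```python
from statistics import mean

# Hypothetical toy data: (hours_studied, exam_score). None marks a missing value.
RAW_ROWS = [
    (1, 52), (2, 55), (3, None), (4, 61), (5, 64),
    (None, 70), (6, 67), (7, 70), (8, 73),
]


def collect():
    """Collect data. Here we just copy an in-memory list."""
    return list(RAW_ROWS)


def clean(rows):
    """Clean/prepare: drop rows with a missing feature or target."""
    return [(x, y) for x, y in rows if x is not None and y is not None]


def explore(rows):
    """Explore: compute a few summary statistics."""
    xs, ys = zip(*rows)
    return {"n": len(rows), "mean_x": mean(xs), "mean_y": mean(ys)}


def fit_line(rows):
    """Model: fit y = slope * x + intercept by ordinary least squares."""
    xs, ys = zip(*rows)
    mx, my = mean(xs), mean(ys)
    slope = sum((x - mx) * (y - my) for x, y in rows) / sum((x - mx) ** 2 for x in xs)
    return slope, my - slope * mx


def evaluate(model, rows):
    """Evaluate: mean absolute error on held-out rows."""
    slope, intercept = model
    return mean(abs(y - (slope * x + intercept)) for x, y in rows)


def run_workflow():
    """Run the phases in order and return results to communicate."""
    rows = clean(collect())
    summary = explore(rows)
    # Assumed split for illustration: even-indexed rows train, odd-indexed rows test.
    train, test = rows[::2], rows[1::2]
    model = fit_line(train)
    return {"summary": summary, "model": model, "mae": evaluate(model, test)}


if __name__ == "__main__":
    # Communicate: print the findings.
    print(run_workflow())
```

Keeping each phase in its own function is one way to support the documentation and iteration practices mentioned above: a step can be revised or re-run without touching the others.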