Data Science Project Management Template for Teams: A Practical Guide

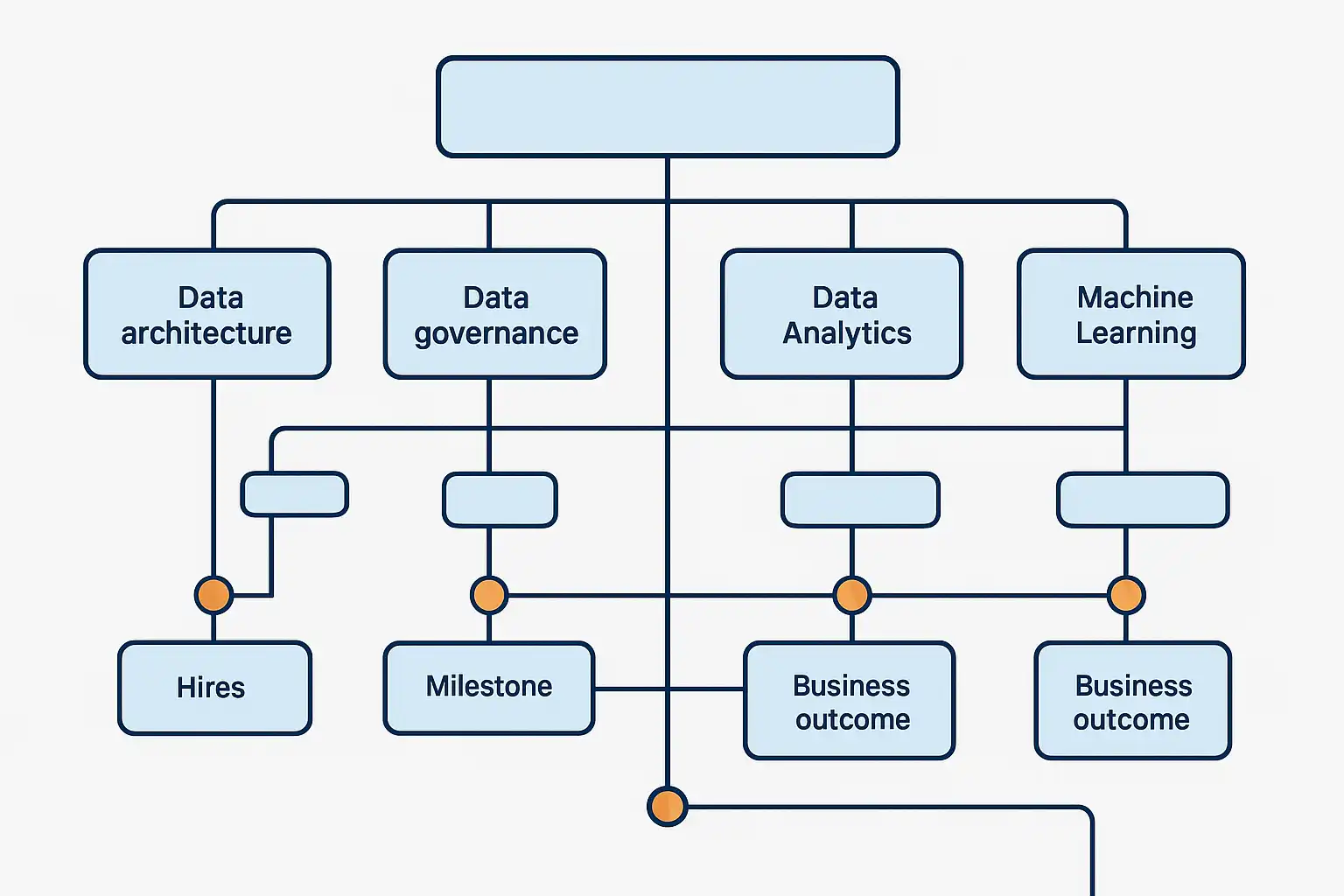

This practical guide explains why a data science project management template helps teams streamline workflows, communicate more clearly, and deliver better project outcomes. A well-designed template organizes the key stages of a project (project overview, data collection, exploratory data analysis, modeling, evaluation, deployment, and documentation), along with roles, timelines, and risk management. Templates range from simple checklists to integrated setups in platforms such as Trello, Asana, Jira, and Notion, or in specialized data science environments such as Dataiku and Azure ML Studio.

Implementing a template involves selecting the right tool, customizing it to fit the team's needs, assigning responsibilities, and updating it regularly. The benefits include clearer structure, more consistent work across projects, faster onboarding, and easier progress tracking. The potential drawbacks are rigidity and over-documentation, particularly when the template is not kept lean and current. Customization is therefore essential to address the requirements of a specific project or industry.

Common pitfalls include neglecting communication, overcomplicating the template, and letting it fall out of date. Both large teams and solo practitioners benefit from templates that balance detail with usability, and regular reviews keep the template improving over time. Overall, adopting a tailored data science project management template boosts team productivity and accountability and improves the chances of successfully delivering complex data projects.
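The stages, owners, timelines, and risk tracking described above can be expressed as a simple data structure. The following Python sketch is illustrative only: the `Task` and `ProjectTemplate` classes, their fields, the stage order, and the sample tasks are all hypothetical and are not tied to the API of Trello, Jira, or any other tool. It models a checklist-style template with a responsible owner and due date per task, a risk log, per-stage progress, and an overdue-task query that could feed a regular review.

```python
from dataclasses import dataclass, field
from datetime import date

# Stage names follow the guide; their order here is an assumption.
STAGES = [
    "Project overview",
    "Data collection",
    "Exploratory data analysis",
    "Modeling",
    "Evaluation",
    "Deployment",
    "Documentation",
]


@dataclass
class Task:
    """One checklist item in the template (hypothetical schema)."""
    stage: str
    title: str
    owner: str  # role or person responsible for the task
    due: date
    done: bool = False


@dataclass
class ProjectTemplate:
    """Checklist-style template: tasks grouped by stage, plus a risk log."""
    name: str
    tasks: list[Task] = field(default_factory=list)
    risks: list[str] = field(default_factory=list)

    def add_task(self, task: Task) -> None:
        # Reject stages the template does not define, to keep it consistent.
        if task.stage not in STAGES:
            raise ValueError(f"Unknown stage: {task.stage!r}")
        self.tasks.append(task)

    def progress(self) -> dict[str, float]:
        """Fraction of completed tasks per stage; stages without tasks are omitted."""
        result: dict[str, float] = {}
        for stage in STAGES:
            stage_tasks = [t for t in self.tasks if t.stage == stage]
            if stage_tasks:
                result[stage] = sum(t.done for t in stage_tasks) / len(stage_tasks)
        return result

    def overdue(self, today: date) -> list[Task]:
        """Open tasks whose due date has passed, for the regular review."""
        return [t for t in self.tasks if not t.done and t.due < today]


# Usage with made-up example tasks.
template = ProjectTemplate(name="Churn model")
template.add_task(Task("Data collection", "Pull CRM export", "Data engineer",
                       date(2024, 3, 1), done=True))
template.add_task(Task("Exploratory data analysis", "Profile missing values",
                       "Data scientist", date(2024, 3, 8)))
template.risks.append("CRM export may lack historical records")

print(template.progress())
print([t.title for t in template.overdue(today=date(2024, 3, 10))])
```

Keeping the stage list in a single place means tailoring the template to a project or industry is a small, local change, which fits the guide's advice to customize a template rather than adopt it wholesale.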